Event-Carried State Transfer Integration in Microservices

Good or Bad?

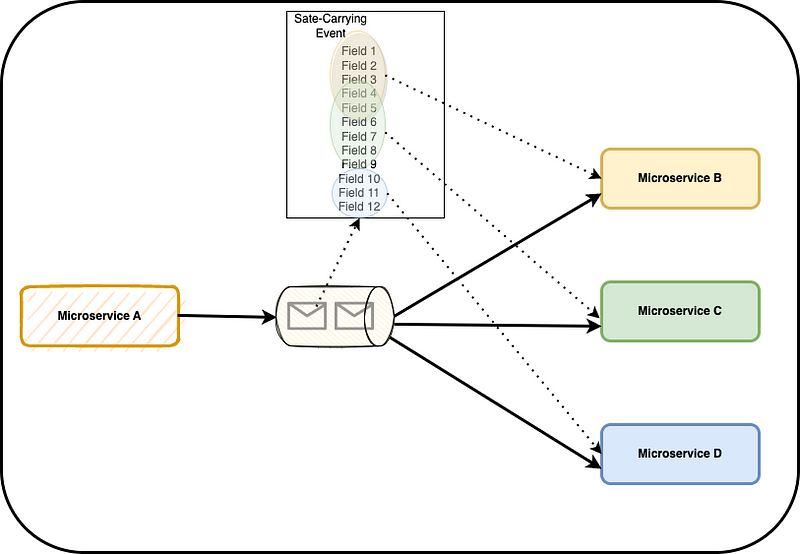

Event-Carried State Transfer is an event-based integration approach where events carry all the information consumers need to perform their functions.
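To make the distinction concrete, here is a minimal Python sketch contrasting a thin event notification with an Event-Carried State Transfer event. The `OrderPlaced` events, their fields and the `fetch_order` callback are all hypothetical. The callback stands in for whatever API a consumer would have to call back on the producer.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class OrderPlacedNotification:
    # Thin event notification: only an identifier; the details stay with the producer.
    order_id: str


@dataclass(frozen=True)
class OrderPlaced:
    # Event-Carried State Transfer: the event carries the data consumers need.
    order_id: str
    customer_email: str
    total_pence: int


def confirmation_from_notification(
    event: OrderPlacedNotification,
    fetch_order: Callable[[str], dict],  # hypothetical call back to the producer
) -> str:
    # Extra round trip to the producer; this fails if the producer is unavailable.
    order = fetch_order(event.order_id)
    return f"To {order['customer_email']}: order {event.order_id} totals {order['total_pence']}p"


def confirmation_from_state(event: OrderPlaced) -> str:
    # Everything the consumer needs is already in the event, so no call back is made.
    return f"To {event.customer_email}: order {event.order_id} totals {event.total_pence}p"
```

Both functions produce the same result. Only the first depends on the producer being reachable at processing time.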

There are benefits to using this approach:

- No temporal coupling to producers

- No additional load on producers from consumers requesting more information

- Reduced latency when processing

All of these benefits stem from consumers being given all the data they need, which they can use however they see fit. They can carry out their function immediately without calling back to the event's producer. They can even maintain a local cache of all the information they gather from the events they consume, although caching in this way has a few issues, as explained here.
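A consumer that keeps a local cache built from the events it consumes might look roughly like the sketch below. The `ShippingService` consumer and the `CustomerAddressChanged` event are hypothetical. The sketch deliberately ignores ordering, duplicates and invalidation, and those omissions are where the cache management problems described below begin.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class CustomerAddressChanged:
    # Hypothetical event carrying the customer's full current address.
    customer_id: str
    street: str
    city: str
    postcode: str


class ShippingService:
    """Hypothetical consumer that keeps a local copy of customer addresses."""

    def __init__(self) -> None:
        self._addresses: Dict[str, CustomerAddressChanged] = {}

    def on_customer_address_changed(self, event: CustomerAddressChanged) -> None:
        # Store the latest state carried by the event; the producer is never called.
        self._addresses[event.customer_id] = event

    def shipping_label(self, customer_id: str) -> str:
        # Served entirely from the local cache, so this keeps working
        # while the producer is unavailable (no temporal coupling).
        address = self._addresses[customer_id]
        return f"{address.street}, {address.city} {address.postcode}"
```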

However, there are many drawbacks to this approach:

Tight coupling

These events are tightly coupled to their consumers and, by extension, so are the producers. Consumers inevitably change, for example by requiring data the events do not currently provide. When that happens, producers must either bend to the consumers' will or change tack and provide APIs for consumers to fetch the missing information, which defeats the purpose of this approach.

Bloated events

One workaround I have seen, and implemented myself in the past, is to include as much information as possible in the event from day one. This results in bloated events that are harder to maintain and version, and it does not address the issue in the long term. Such events give a false sense of future-proofing, because it is difficult to predict how microservices will change.

Complex cache management

Additionally, maintaining a local cache raises numerous issues stemming from ownership of the data, or the lack of it. These range from how to cache the data and when to invalidate it, to controlling who can view it, security, permissions, and so on. I cover these issues in more detail in this post.
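As one small illustration of why "when to invalidate it" is harder than it looks, here is a sketch of a consumer-side cache that has to cope with duplicate and out-of-order events. It assumes that the producer stamps each event with a `version` that increases for every change to an account. That field, the `BalanceChanged` event and the `LocalBalanceCache` class are all hypothetical. Note that even this only handles staleness. Ownership, access control and security still sit outside the cache.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class BalanceChanged:
    account_id: str
    balance_pence: int
    version: int  # assumption: the producer increments this per account on every change


class LocalBalanceCache:
    """Hypothetical consumer-side cache that tolerates duplicate and out-of-order events."""

    def __init__(self) -> None:
        self._entries: Dict[str, BalanceChanged] = {}

    def apply(self, event: BalanceChanged) -> bool:
        current = self._entries.get(event.account_id)
        # Drop duplicates and events older than the state already held;
        # otherwise a late-arriving event would overwrite newer state.
        if current is not None and event.version <= current.version:
            return False
        self._entries[event.account_id] = event
        return True

    def evict(self, account_id: str) -> None:
        # For example, on an account-closed event, or when this consumer
        # is no longer permitted to hold the data.
        self._entries.pop(account_id, None)

    def balance(self, account_id: str) -> Optional[int]:
        entry = self._entries.get(account_id)
        return None if entry is None else entry.balance_pence
```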

Low cohesion

Scattering the data this way also tends to scatter the logic, because logic usually follows raw data, and this leads to low cohesion. This data originates from business-focused events, so it is not raw to begin with. However, without proper governance, the services caching the data may, over time, start treating it as raw data by erroneously taking ownership of it, interpreting it in different ways, publishing it based on those interpretations, and so on.

A real-world example

Years ago, I joined a bank where Event-Carried State Transfer and local caching by consumers were heavily used. Different services maintained and interpreted this data as they saw fit. On the surface, this resulted in a more resilient system. Over time, however, the lines of ownership blurred, and those consumers started adding their own logic on top of the cached data. The result was no fewer than five services maintaining customers' bank account balances, and most of them differed. Which of them was the source of truth? What was a customer's actual balance?

Conclusion

Is Event-Carried State Transfer good or bad? This may be the typical clichéd answer, but it depends. To answer that question, you will need to explore the alternatives, weigh their pros and cons, and consider how those align with what you are willing to trade off.

Guidelines should be put in place to ensure that data ownership is respected, high cohesion is maintained, and events remain backwards compatible to ease their versioning.
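One common way to keep events backwards compatible is to only ever add optional fields and never remove or rename existing ones. Consumers pair this with a "tolerant reader" that ignores fields it does not recognise. The sketch below assumes JSON payloads. The `CustomerRegistered` event and its fields are hypothetical, and this is just one technique among several.

```python
import json
from dataclasses import dataclass, fields
from typing import Optional


@dataclass(frozen=True)
class CustomerRegistered:
    # v1 fields: required, and never removed or renamed.
    customer_id: str
    email: str
    # v2 addition: optional with a default, so v1 payloads still parse.
    preferred_language: Optional[str] = None


def parse_customer_registered(payload: str) -> CustomerRegistered:
    data = json.loads(payload)
    known = {f.name for f in fields(CustomerRegistered)}
    # Tolerant reader: ignore fields this consumer does not know about,
    # so producers can add fields without breaking older consumers.
    # A missing required field still raises TypeError.
    return CustomerRegistered(**{k: v for k, v in data.items() if k in known})
```

With this approach, an older payload without `preferred_language` and a newer payload carrying extra fields both parse without changes to the consumer.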